EpiDepth: A Real-Time Monocular Dense-Depth Estimation Pipeline Using Generic Image Rectification

Proc. SPIE 12099, Geospatial Informatics XII, 2022

Abstract

Because of weight, power, and cost constraints, most unmanned aircraft systems (UAS) carry monocular cameras. Monocular UAS therefore require real-time structure from motion (SfM) algorithms to sense and autonomously navigate 3D environments. The SfM algorithm must run in near real time and handle the wide variety of extrinsic parameters that arise between UAS image pairs. Common rigid epipolar rectification techniques (homography-based rectification) do not accurately model epipolar geometries in which the epipoles lie close to or within the image bounds. Several common UAS movement types, including translation along the lens axis, tight radial turns, and circular patterns with a GPS-locked camera focus, can produce epipolar geometries with epipoles inside the camera frame. A generalized epipolar rectification technique handles all of these movement types and allows optimized image block matching techniques to be used to produce disparity/depth maps.
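To illustrate why these movement types are problematic, the following minimal sketch computes where the epipole falls in the first image of a pair and tests whether it lies inside the frame. It is not the paper's implementation. It assumes an undistorted pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`, a camera-to-world rotation matrix `R1`, and camera centers `C1` and `C2` given in a shared world frame. The function names are hypothetical.

```python
def epipole_in_first_image(R1, C1, C2, fx, fy, cx, cy, eps=1e-9):
    """Project the second camera center into the first image.

    Assumptions (not from the paper): R1 is a 3x3 camera-to-world rotation
    given as nested lists, C1 and C2 are camera centers in world coordinates,
    and the camera is an ideal pinhole with no lens distortion.
    Returns (u, v) in pixels, or None when the epipole is at infinity.
    """
    baseline = [C2[i] - C1[i] for i in range(3)]
    # World-to-camera rotation is the transpose of R1, so component i of the
    # baseline in camera coordinates is column i of R1 dotted with the baseline.
    d = [sum(R1[k][i] * baseline[k] for k in range(3)) for i in range(3)]
    if abs(d[2]) < eps:
        # Baseline parallel to the image plane: epipole at infinity, the case
        # that homography-based rectification handles well.
        return None
    return (fx * d[0] / d[2] + cx, fy * d[1] / d[2] + cy)


def epipole_inside_frame(epipole, width, height):
    """Return True when a finite epipole lies within the image bounds."""
    if epipole is None:
        return False
    u, v = epipole
    return 0 <= u < width and 0 <= v < height
```

Under these assumptions, pure translation along the lens axis places the epipole at the principal point, which is inside the frame, whereas pure sideways translation sends it to infinity.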

The majority of UAS carry GPS/IMU/magnetometer modules, which provide absolute camera extrinsic parameters for every image. The essential and fundamental matrices between image pairs can be calculated from these absolute extrinsics. Once SfM has been performed and depth images have been produced, the camera-space pixel values can be projected into three-dimensional space as point clouds. These point clouds provide scene understanding that can be used by autonomous reasoning systems.
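The calculation of the essential and fundamental matrices from absolute extrinsics can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' code. Each pose is assumed to be a camera-to-world rotation `R_i` with a camera center `C_i` in world coordinates, and both images are assumed to share the same pinhole intrinsics `fx`, `fy`, `cx`, `cy`, which the fundamental matrix needs in addition to the extrinsics. With these conventions the relative pose is R = R_2^T R_1 and t = R_2^T (C_1 - C_2), the essential matrix is E = [t]_x R, and the fundamental matrix is F = K^-T E K^-1. All function names are hypothetical.

```python
def matmul(A, B):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(A):
    """Transpose a 3x3 matrix."""
    return [[A[j][i] for j in range(3)] for i in range(3)]


def matvec(A, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]


def skew(t):
    """Cross-product matrix [t]_x, so that matvec(skew(t), v) equals t x v."""
    return [[0.0, -t[2], t[1]],
            [t[2], 0.0, -t[0]],
            [-t[1], t[0], 0.0]]


def essential_and_fundamental(R1, C1, R2, C2, fx, fy, cx, cy):
    """Build E and F for an image pair from absolute poses.

    Assumed convention: a world point X_w maps to camera i as
    X_i = R_i^T (X_w - C_i), which gives X_2 = R X_1 + t below.
    """
    R = matmul(transpose(R2), R1)
    t = matvec(transpose(R2), [C1[i] - C2[i] for i in range(3)])
    E = matmul(skew(t), R)
    # Closed-form inverse of the pinhole intrinsic matrix K.
    K_inv = [[1.0 / fx, 0.0, -cx / fx],
             [0.0, 1.0 / fy, -cy / fy],
             [0.0, 0.0, 1.0]]
    F = matmul(transpose(K_inv), matmul(E, K_inv))
    return E, F


def project_point(R, C, X_w, fx, fy, cx, cy):
    """Project a world point to homogeneous pixel coordinates (u, v, 1)."""
    X = matvec(transpose(R), [X_w[i] - C[i] for i in range(3)])
    return [fx * X[0] / X[2] + cx, fy * X[1] / X[2] + cy, 1.0]


def epipolar_residual(F, p1, p2):
    """Evaluate p2^T F p1, which is zero for a perfect correspondence."""
    Fp1 = matvec(F, p1)
    return sum(p2[i] * Fp1[i] for i in range(3))
```

A quick sanity check is to project one world point into both cameras with `project_point` and confirm that `epipolar_residual` is close to zero. With real GPS/IMU/magnetometer data the residual will be nonzero because of sensor noise.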

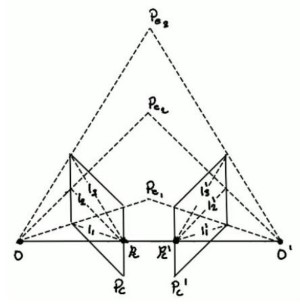
